Recently, I mentioned to my wife that I needed new skis for this winter. Her response? “Define Need.” When it comes to collecting Encounter Data for CMS, perhaps I should consider sending my wife to Baltimore to help smooth things out.

If you have not heard of Encounter Data Processing for CMS is you could go here or just go ahead and skip this article entirely.

So while health plans have been busy for more than two years trying to comply with EDPS and prepare to switch over from RAPS (Risk Adjustment Processing System) , some involved with the process have lost sight of why we are doing this in the first place. CMS isn’t out to make things more difficult or to simply to see how high plans will jump. EDPS exists to settle some issues that can’t be addressed without more complete data. The problem is that data collection requirements can easily get out of hand.

Background: A Disagreement

In 2009, Medicare Advantage cost CMS roughly 14% more per patient than Fee For Service (FFS) patients. In 2010, that number dipped to 9% more, but still represented billions of dollars in additional cost to Medicare. The Medicare Advantage Organizations (MAOs) have pointed out that they have sicker patients on average and provide more services than FFS patients receive. CMS claimed that since MAOs are paid a Risk Adjustment Factor (RAF) based on what is wrong with patients instead of on services they provide like in FFS, they are simply better at reporting than doctors who see FFS patients. In fact, there is already an adjustment to RAF for the effect of coding intensity.

Measuring outcomes such as re-admission rates or patient satisfaction show MAO patients are better off than in FFS Medicare. MAO plans also claim that they do a better job of managing complex conditions such as diabetes and that costs money. Since current reporting (RAPS) does not show all the steps taken to provide the care, there is no way to reconcile whether CMS or the MAOs are right – or even who is “more” right.

Reasons and Realignment

To sort out how to fix the model in a fair way, EDPS uses the full data set of an 837 claim file as the source data instead of the 7 fields or so that are found in RAPS. Essentially, if CMS can get a picture of not only what is wrong with the patients today (like in RAPS) but also, what services were provided in the course of care, they can try and reconcile the model. Are the patients truly more sick on average? Are the MAOs actually being good stewards of the funds they are given and providing equal or even more care than a FFS patient gets? To get to the bottom of this, they would need to get the following information:

To sort out how to fix the model in a fair way, EDPS uses the full data set of an 837 claim file as the source data instead of the 7 fields or so that are found in RAPS. Essentially, if CMS can get a picture of not only what is wrong with the patients today (like in RAPS) but also, what services were provided in the course of care, they can try and reconcile the model. Are the patients truly more sick on average? Are the MAOs actually being good stewards of the funds they are given and providing equal or even more care than a FFS patient gets? To get to the bottom of this, they would need to get the following information:

1. Clear understanding of services rendered – what are all the things that are being provided to the patients in an MAO plan? With this data, a patient with the same exact condition can be compared from MAO to FFS to determine the level of care received.

2. Complete data – every visit, procedure, test etc. must be submitted rather than the subset of risk adjustable data that is found in RAPS. In RAPS, submitting additional instances of the same diagnosis really didn’t do anything to the RAF calculation. To be able to compare utilization across the models, care provided that is unrelated to HCCs and RAF also must be submitted in total.

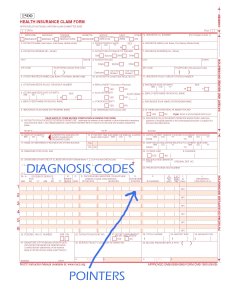

In order to make valid 837 files for submission to CMS, every encounter must include Member ID info, Provider Identifiers for both Billing and Rendering, and service line information such as DOS, CPT, Modifiers, REV Codes, Specialties, POS and charges. The problem comes in with how to use this data once it is received by CMS.

Not Claims Processing

While I was not a party to any of the discussions behind how to implement EDPS at CMS, I imagine the reasons they went with outbound 837s as the model is that they already receive these today for FFS processing and perhaps that some state Medicaid systems collect 837s for their model today. The thought was probably that they could just take the FFS system that could already process 837s and modify it to take in encounter data for use in EDPS instead. The problem is that claims processing requirements don’t always line up with EDPS. It is easy to look back and say that collecting 835s that every MAO in America can already output and contains a clear record of what took place in the course of care would have been a better way to go, but that won’t help us here.

In FFS processing, certain data may be required in order to pay a claim. If the data is not present, the claim is denied. If a FFS provider wants to get paid, they will get the needed data and resubmit. With MAO plans however, there isn’t any requirement to follow FFS submission rules. If a plan wants to work with a particular doctor or facility their contract will dictate what needs to be submitted. For example, skilled nursing facilities (SNF) must submit 837 claims to CMS for FFS payment. Another SNF may work with MAO plans and submit claims via paper form which may not have all the data elements needed to make a valid SNF claim. If that MAO then tries to submit EDPS data showing the SNF encounters, they will be rejected due to missing data elements. The encounter certainly happened and the MAO paid the claim; there is nothing to “fix” in the system of record (e.g. claims system) to make it submittable to CMS. If data is made up to make it submittable, the head of the plan’s compliance efforts would likely be less than pleased to say the least. If the data is not submitted to CMS, utilization will seem lower than it actually is. Typically I refer to these types of claims as the “encounter grey zone”. These are claims that are correctly processed by the plan according to their business rules, and yet are unsubmittable to CMS.

In the above example, RAF scores would likely not suffer too greatly if at all. The direct impact is not felt because other encounters would likely be present to cover any related HCC diagnosis. Of course this is going to be a revenue department’s first concern at a plan. However, even if small numbers of encounters are unsubmittable at each plan, utilization across all plans will appear lower and therefore there will be an indirect but definite impact to plan payment when utilization is calculated by CMS and applied to the new reimbursement model.

One option, which would take a great deal of time and effort to come to fruition, would be to make sure the same rules that apply to CMS FFS submission are then followed by providers and then the plan’s claim system processing rules. While this is possible, it essentially means that CMS’s rules and system become a defacto way to enforce payment practices on MAO plans. There are a lot of attractive reasons to work with an MAO rather than FFS Medicare, but those reasons start to go away as MAOs have to add layers of rules and bureaucracy.

There is a lot of data in an 837. When you take into account the fact that all encounters must be submitted to CMS, plans are looking at 500-1000 times as much data as submitted under RAPS. While balancing claim lines for amounts claimed, paid, denied – not to mention coordination of benefit payments – is not a part of the stated goals of EDPS, balanced claims are needed to make a processable 837 file. Due to the nature of contracts and variability of services provided within identical CPTs, this data won’t likely proved statistically significant to CMS even if they are able to collect and data mine it.

Reexamine the stated goals of Encounter Data Collection

I am sure there is lots of data that would be nice to have for some data miner at CMS someday. Now that we are all quite far into this thing, there are certain things that would be painful to undo, however there is still an opportunity to take a step back and reexamine why we are doing this in the first place. In many cases, CMS is still running the submitted data through a system designed to pay or deny claims before it reaches their data store. This means a lot of edits and a lot of reasons why an encounter might reject. To their credit, CMS has turned a lot of edits off, but when the starting point was a full claims environment, there is still a long way to go.

If CMS were to reexamine the edits involved in the EDPS process, they would find it is in not only the plan’s best interest to turn off many edits, but their own as well. If an edit doesn’t fit the following criteria, it should be turned off.

- Can the member be identified? Doing a good job so far on this one.

- Can the provider be identified? After a positive NPI match, there should not be rejections for mismatched addresses, zip codes, names, etc. If it is a valid NPI and CMS still has rejections then the table CMS is using for this process MUST be shared with the plans so they can do look-ups prior to submission. Plans can’t be expected to guess this information. There are a lot of kinds of provider errors out there that need to be relaxed.

- Is it a valid 837 v5010? If the standard is not followed and the required fields according to the TR3 are not present, all bets are off. However, this may mean that certain fields should be able to be defaulted in the same way that Ambulance mileage / pick up and drop off defaults have been allowed. There are lots of segments and elements to the TR3 that are Situational unless your trading partner requires them. Most of these are just not required to realign the model.

Finally ask the following: Does a rejection indicate doubt the encounter happened, or that CMS doesn’t normally pay it? If an encounter / line doesn’t have a valid DOS, CPT, Unit where required, Modifier where needed, diagnosis code(s) then it may be unclear what happened and when. Barring that, the decision whether to accept the encounter data should be to accept. Whether CMS normally pays without that data in a FFS environment is irrelevant.

What do you think? I’ll monitor the comments to hear your thoughts.

Eventually, someone in Information Technology or Database Administration gets asked to extract data from a PHI rich line of business system or data warehouse but deliver it as de-identified data. Almost any data extraction approach allows for data to be masked, redacted, suppressed or even randomized in some way. This type of functionality can give us de-identified but often useless data for testing, analytics or development.

Eventually, someone in Information Technology or Database Administration gets asked to extract data from a PHI rich line of business system or data warehouse but deliver it as de-identified data. Almost any data extraction approach allows for data to be masked, redacted, suppressed or even randomized in some way. This type of functionality can give us de-identified but often useless data for testing, analytics or development.

Imagine you work at a health insurance company. Your title is “Claims Examiner” and you spend each day deciding if bills sent from doctors for the insurance company’s members should be paid. You must be sure the treatments match the diagnosis, the member is eligible for the payment and the amount being asked for is correct. This work is performed in a “Claims System”. Claims Systems are one of the first widespread uses of computers in business and have been around for 40 years. This is the lifeblood of a health insurance company and seemingly all their other systems are related to it. The data the Examiner uses to pay or adjust the bills doesn’t need to be obscured in any way because it is part of TPO (treatment, payment or health care operations).

Imagine you work at a health insurance company. Your title is “Claims Examiner” and you spend each day deciding if bills sent from doctors for the insurance company’s members should be paid. You must be sure the treatments match the diagnosis, the member is eligible for the payment and the amount being asked for is correct. This work is performed in a “Claims System”. Claims Systems are one of the first widespread uses of computers in business and have been around for 40 years. This is the lifeblood of a health insurance company and seemingly all their other systems are related to it. The data the Examiner uses to pay or adjust the bills doesn’t need to be obscured in any way because it is part of TPO (treatment, payment or health care operations).

A lot of questions are being asked about Medicare Advantage and Risk Adjustment lately, very likely due

A lot of questions are being asked about Medicare Advantage and Risk Adjustment lately, very likely due  For the purpose of this discussion, we’ll put aside the fact that CMS took an overly complicated and non-standard approach to submitting deletes via EDI. However, the store and forward approach makes things a lot harder even if you know exactly what to delete. The store and forward approach in a nutshell is get stuff (encounter data), and forward that stuff on once it is formatted as a message to CMS. Following this flow, what “stuff” is the system to “get” so that it is to be forwarded as a message letting CMS know it should delete that code? A new process needs to be created to look through existing submissions for things to delete. This process is needs to do complex matching and status queries to even have a chance to send a delete. But even if all that can be pulled off, what should be deleted?

For the purpose of this discussion, we’ll put aside the fact that CMS took an overly complicated and non-standard approach to submitting deletes via EDI. However, the store and forward approach makes things a lot harder even if you know exactly what to delete. The store and forward approach in a nutshell is get stuff (encounter data), and forward that stuff on once it is formatted as a message to CMS. Following this flow, what “stuff” is the system to “get” so that it is to be forwarded as a message letting CMS know it should delete that code? A new process needs to be created to look through existing submissions for things to delete. This process is needs to do complex matching and status queries to even have a chance to send a delete. But even if all that can be pulled off, what should be deleted?